Powershell and Tax

For the last couple of days, I've been doing my UK tax return for the HMRC. It used to take a lot longer, but in recent years I've been using powershell to help out. It hasn't yet managed to reduce the tax I have to pay, but it gets me the information I need much quicker. And there's a continuous development process happening, whereby some of the new techniques I've picked up during the preceding year get incorporated. (The same sort of thing happens every year with our Christmas e-cards. Each year I think I've got the automation perfected, but the following year, there are always a few tweaks that come to mind as the clock ticks towards midnight on Christmas Eve.)

So this year I've been deepening my knowledge of regular expressions and doing a lot more with REST APIs, both in a powershell context. These have come to my rescue as I try to extract information from my bank statements, related to my author business, charitable giving and whatnot.

The first job is always to merge my bank statements into a single CSV file. Sadly, my bank will only let me download the last 4 months of statements. They used to let me load a complete tax year, but "there's no demand for it" and the function got dropped. So now I download as much as I can, every time I log on to the bank's website, and I use powershell to stitch the individual CSV files together, no omissions, no duplications.

All those bank statements are saved in a folder for the tax year. Each will have 4 months of data, but will certainly overlap, because I pull a fresh statement down whenever I log on to online banking. And I have decided that when I work with the merged file, I want it to run from oldest to newest. So I may - depending on the bank - need to reverse the order of transactions in the CSV file. That ordering has changed over the years I've been doing this, either because I've changed banks, or because for whimsy the bank has decided to change the order.

That initial code looks like:

#

# start-up code omitted, includes setup of $sdir $TaxYear $TaxYearFolder $AccountInfo

$Name = $AccountInfo.AccountNumber

Get-ChildItem $TaxYearFolder -Recurse -Include "*${Name}*.csv" | foreach {

$recordSet = $_.FullName | foreach {

$filename = $_

Write-Host "Import $filename"

$transSub = Get-Content -LiteralPath $filename -Encoding Default |

where {-not [string]::IsNullOrWhiteSpace($_)} |

ConvertFrom-Csv |

Select-Object Date,Type,Description,"Paid in","Paid out",Value,Balance,"Account Name","Account Number",Notes |

where {$_.Date -ne '-'}

$firstDate = [datetime]::Parse($transSub[0].Date)

$lastDate = [datetime]::Parse($transSub[$transSub.Length - 1].Date)

if ($lastDate -lt $firstDate) {

#

# reverse the order of records

for ($ix = $transSub.Length - 1; $ix -ge 0; $ix--) {

$transSub[$ix]

}

}

else {

# preserve the order of records

$transSub

}

}

$startDate = [datetime]::Parse($recordSet[0].Date)

$endDate = [datetime]::Parse($recordSet[$recordSet.Length - 1].Date)

$outputFile = "$sdir\$TaxYear\${Name}_$($startDate.ToString("yyyyMMdd")).csv"

$recordSet | Export-Csv -NoTypeInformation -Encoding UTF8 -LiteralPath $outputFile

}

It's not terribly complicated in itself, but it encapsulates some lessons I've learned that may bear passing on.

That whole sequence

Get-Content ... ConvertFrom-Csvcould have been performed with a simplerImport-Csv. I didn't, because at some point I must have encountered a CSV file which had some blank lines before or after the CSV header line, which broke stuff. I don't control the CSV, so I program defensively, removing all blank lines - that's the[string]::IsNullOrWhiteSpace($_)construction. I do it at the earliest opportunity, before any conversion to CSV has taken place, so that code further down doesn't have to worry about what an empty line looks like after conversion.I've had to deal with a couple of different layouts of CSVs, so - after conversion - I run the

Select-Objectto force a superset of the possible fields. In this case, some variants of the CSV have hadPaid inandPaid outfields, while others have had aValuefield. I've also added aNotesfield, for me to annotate the CSV with, if needed.The

$recordset = <pipeline>construct is typically used to build up an array of objects - in this case from the$transSub(Transaction Subset) records. In the case where the elements are reversed, the code emits a succession of elements (objects). In the case where the elements are already correctly ordered, a single object of type array is emitted. powershell sorts it out so that the end result is the same:$recordsetcontains an array of objects.$recordsetgets written to an intermediate file, also a CSV. I could have simply piped the output from theforeachinto anotherforeach {}pipeline element to sort/merge records, but writing to intermediate files gives me control over the naming of the files, which makes them easy to pre-sort by start date, and which simplifies the merge step. It also eliminates the issue of handling either a single array object containing all the rows or a stream of individual row objects. Finally it makes maintenance easier, because I can inspect the intermediate files.A couple of places I've used the

-Encodingparameter. CSV files that I generate are always explicitly created usingUTF8encoding. This has generally eliminated character set issues, particularly when importing into Excel, which can sometimes mangle characters. Using-Encoding Defaulton input guides the import process to handle the Windows 125x and ISO-8859 encodings correctly

While the merging might be of interest to some, there's nothing more sophisticated to it than importing those intermediate files, from oldest start date to newest, and skipping any records older than the most recent date in memory.

Where it gets interesting again is when I'm checking the transaction descriptions for specific payees, and matching them against a long list of known names and their category, such as Gift Aid, or Pension company, or my author business. This is where Regular Expressions enter the picture.

Regular Expressions are a bit of a 'marmite' topic, some developers love 'em, others hate 'em. I belong in the former camp. But I can understand the hatred - working out how someone else's regex works can be painful. So writing them in a way that can be tested and understood by a future maintainer (who might be your future self) is a Good Thing.

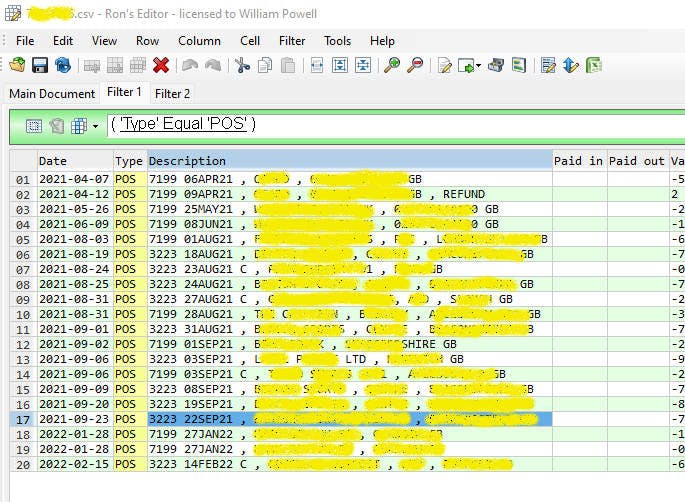

Here is an extract of some of my transactions:

and I'd like to extract the payee, along with the card info and the date at the start of the line.

Let's start with the card info and a basic regex:

$CardRegex = "[0-9][0-9][0-9][0-9]"

We can simplify that as

$CardRegex = "\d{4}"

which means exactly 4 decimal digits. Let's see how we'd use that to analyse the description to extract the card digits.

if ($row.Description -match "^(\d{4}).*$") {

$CardDigits = $Matches[1] # whatever is between the first pair of ()

}

$Matches is provided, and it contains the whole string in $Matches[0], then any submatches as identified by matching parentheses in subsequent elements. But there's another technique we can add in here, which is to name the matches.

if ($row.Description -match "^(?<carddigits>\d{4}).*$") {

$CardDigits = $Matches.carddigits # a named match

}

But that regex is already starting to look unwieldy. Let's break it out:

$CardRegex = "(?<carddigits>\d{4})"

if ($row.Description -match "^${CardRegex}.*$") {

$CardDigits = $Matches.carddigits # a named match

}

For the sake of clarity, I've switched to using the ${CardRegex} form for variable references rather than $CardRegex. Both are correct and equivalent, but when a variable is immediately followed by further alphanumeric characters (as is common in regex work) having a } character at the end is necessary.

The next piece is to handle that one case with the trailing ' , REFUND'. It may or may not be present, so we allow counts of 0 and 1:

$CardRegex = "(?<carddigits>\d{4})"

$RefundRegex = "(?<refund>\s*,\s*REFUND){0,1}" # allow 0 or 1 matches

if ($row.Description -match "^${CardRegex}.*?${RefundRegex}$") {

$CardDigits = $Matches.carddigits # a named match

$RefundPresent = $null -ne $Matches.refund # null or a named match

}

As well as the $RefundRegex I've made a small, but significant change to the 'match anything' regex in the middle, which was .* but which I've changed to .*? which means 'match anything - but as short as possible'. That ensures that if the refund text is present, it will be matched. Without that ? the regex could get greedy, take in the refund text and still match the zero count option of $RefundRegex.

Now we can build out a regex for the date field that follows the card digits. As always, we start with small pieces that can be tested easily, before combining them:

$AlphaMonthRegex = "(?<amon>JAN|FEB|MAR|APR|MAY|JUN|JUL|AUG|SEP|OCT|NOV|DEC)"

$DayOfMonthRegex = "(?<dom>\d{2})"

$YYearRegex = "(?<yy>\d{2})"

$ADateRegex = "(?<adate>${DayOfMonthRegex}${AlphaMonthRegex}${YYearRegex})"

$TrailCRegex = "(?<trailc>(\sC){0,1})" # space + C, 0 or 1 times

$ADateRegex = $ADateRegex + $TrailCRegex

$CardRegex = "(?<carddigits>\d{4})"

$RefundRegex = "(?<refund>\s*,\s*REFUND){0,1}" # allow 0 or 1 matches

$AllRegex = "^${CardRegex}\s+${ADateRegex}.*?${RefundRegex}$"

if ($row.Description -match $AllRegex) {

$CardDigits = $Matches.carddigits # a named match

$RefundPresent = $null -ne $Matches.refund # null or a named match

$AlphaDate = $Matches.adate # or whatever we need

$TrailCPresent = -not [string]::IsNullOrWhiteSpace($Matches.trailc)

}

Note that the regex has been broken out into the variable $AllRegex, for readability.

Also note that I've used a slightly different regex form for $TrailCRegex compared to $RefundRegex - see if you can spot the difference. Both work, but the value in $Matches.trailc is either a zero length string or the string ' C', whereas the value in $Matches.refund is either $null or ' , REFUND'. You can see how I've coded the tests differently to accommodate the difference. There are usually a few ways to accomplish a given task in powershell...

Nearly there. Let's get the middle piece, the actual payee information. We want to exclude the leading comma separator, and any leading or trailing whitespace \s, so that the payee begins and ends with non-space characters \S.

$PayeeRegex = "(?<payee>\S.*?\S)" # match as little as possible

$AlphaMonthRegex = "(?<amon>JAN|FEB|MAR|APR|MAY|JUN|JUL|AUG|SEP|OCT|NOV|DEC)"

$DayOfMonthRegex = "(?<dom>\d{2})"

$YYearRegex = "(?<yy>\d{2})"

$ADateRegex = "(?<adate>${DayOfMonthRegex}${AlphaMonthRegex}${YYearRegex})"

$TrailCRegex = "(?<trailc>(\sC){0,1})"

$ADateRegex = $ADateRegex + $TrailCRegex

$CardRegex = "(?<carddigits>\d{4})"

$RefundRegex = "(?<refund>\s*,\s*REFUND){0,1}" # allow 0 or 1 matches

$AllRegex =

"^${CardRegex}\s+${ADateRegex}\s*,\s*${PayeeRegex}\s*${RefundRegex}$"

if ($row.Description -match $AllRegex) {

$CardDigits = $Matches.carddigits # a named match

$RefundPresent = $null -ne $Matches.refund # null or a named match

$AlphaDate = $Matches.adate # or whatever we need

$TrailCPresent = -not [string]::IsNullOrWhiteSpace($Matches.trailc)

$Payee = $Matches.payee # further processing...

$PayeeStart = $Payee -replace ",.*",'' # up to 1st comma

$PayeeLines = $Payee -split "\s*,\s*" # multi-line version

}

And there we go. \s+ catches mandatory whitespace, \s* catches optional whitespace. That $Payee -split "\s*,\s*" construction is used to split the payee section into an array, splitting at internal commas. The -split operator takes a regex, which mops up any leading or trailing space around the commas, leaving the array elements without leading or trailing space.

I hope this has helped illuminate the power of Regular Expressions in powershell, and provided some pointers on how to write maintainable Regular Expression code in general.

I intend to follow up in a future post on using REST from powershell, as well as Alternate Data Streams on NTFS.